Monitoring and Evaluation (M&E) - Wind Energy

WORK in PROGRESS

2031_Drillisch-Suisse-Eole-6-2-04; 20091216 Präsentation TERNA Wirkungen

Introduction

‘Increasing flexibility, up to date and reliable reporting, observing achievements of objectives, potential risks, changing and unintended impacts…’[1]: development programmes and projects are committed to meeting these (and other) requirements while working in an efficient, effective and sustainable manner.

Monitoring & Evaluation (M&E) supports a programme in meeting these requirements. An M&E system can help meet the requirements of flexibility, accountability, and continuous observation and information, and it delivers information on the efficiency, effectiveness and sustainability of a programme.

Definition & Objectives of results-based M&E

The OECD Glossary of Key Terms in Evaluation and Results Based Management defines Monitoring as “a continuing function that uses systematic collection of data on specified indicators to provide management and the main stakeholders of an ongoing development intervention with indications of the extent of progress and achievement of objectives and progress in the use of allocated funds” [2].

- Monitoring continuously observes what is happening because of development interventions and with what effects.

- Monitoring indicates whether a development intervention is still on track towards its anticipated results.

- Additionally, monitoring observes unintended effects of the intervention and changing conditions in the intervention’s environment.

Monitoring can hence be described as a tool for the “navigation” of a project that addresses the requirement of flexibility: it observes the achievements of a project, its potential risks, and changing and unintended impacts. The results of monitoring can thus indicate which further activities are necessary to achieve the objective(s) of the project.

Evaluation is defined by the OECD Glossary of Key Terms in Evaluation and Results Based Management as “the systematic and objective assessment of an on-going or completed project, programme or policy, its design, implementation and results (…) to determine the relevance and fulfillment of objectives, development efficiency, effectiveness, impact and sustainability.” [3] While monitoring accompanies the project continuously,

- evaluation is carried out at certain stages in order to assess whether the objectives set for those stages have been met.

- Evaluation looks not only at the achievement itself (what has happened?) but also at how it has happened (or has not).

- Evaluation therefore addresses accountability and, at the same time, observes inputs, activities, outputs, use of outputs and benefits with regard to planned and achieved efficiency, effectiveness and sustainability of the development intervention.

- Evaluation can therefore underpin the legitimacy of a project, but it may also provide information that leads to alterations of the project’s concept.

Monitoring & Evaluation combines both functions into a tool that supports meeting the above-mentioned requirements. M&E brings together the information provided by both functions in order to establish what has happened and with which effects. Knowing how this has happened provides lessons learned for ongoing activities and future projects.

Results-based M&E follows GTZ’s Results-based Monitoring Guidelines, which call for taking in “the whole results chain, from inputs, via activities and outputs through to the outcomes and impacts”[4]. M&E therefore observes this entire results chain.

Objectives of M&E can be:

- Providing information on what has happened because of the development intervention (effects) (efficiency of the project; accountability to clients, public)

o What has happened?

o With which effects?

o Who is doing what?...

- How has this happened and why? (programme steering)

o How did we achieve XY? (lessons learned, best practices – knowledge management)

o Why did something not happen? (lessons learned, best practices – knowledge management)

o Change of conditions?

- Assessment of the project’s concept and objectives, possibly yielding statements on necessary alterations (legitimation, sustainability & significance; accountability to clients, public)

o Adequate baseline information (What is the starting point? Programme steering)

o Adapted strategies according to project’s environment (programme steering)

- Assessment of the project’s inputs, activities, outputs, use of outputs and benefits, possibly yielding statements on necessary alterations (effectiveness of inputs and activities in relation to outputs, use of outputs and benefits; accountability to clients, public; knowledge management)

Simplified monitoring matrix

| Steps | Starting Point | Inputs | Activities | Output | Use of Output | Direct Result | Indirect Result |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Key Questions for M&E | What is the current state? | What resources have been delivered (financial, human, material, technological and information resources)? | What has been done with the resources? Actual vs. planned activities? | What is the output (resulting from the inputs and the activities implemented with them)? Why? | Have outputs been utilized? How? Why? | What results can be linked directly to the project intervention (because of inputs, activities and outputs)? | In which (other) areas does the project have impacts as a consequence of the above? To what extent (presumably)? |
| To Do | Measuring the current state of structures, qualifications, skills, needs, expectations etc. of, e.g., responsible/addressed institutions for EE | Before-after comparison; documentation of actual inputs and their quantity/quality; assessment of the adequacy of service delivery | Comparison of planned and actual activities; monitoring of the implementation of activities | Following up the conversion of activities into outputs; assessment of achievement (and efforts); check of milestones | M&E of acceptance by clients and of the adequacy of quality and quantity of service delivery (needs of clients); check of milestones | Measurement of indicators for direct benefit | Assessment (rather than measurement) of the contribution to indirect benefits; (pre-)determination of quantitative and qualitative contribution |
| M&E Source of Information and Procedure | Baseline (BSL): to be developed (foundation for monitoring and evaluation) and based on already existing information | Identification of project documents/communication (planned inputs: plans of operation) | Identification of project documents/information/communication (planned activities: OPs) | To be developed (possibly identification of existing communication tools; use of experience from the BSL, alongside OPs) | To be developed (use of experience from the BSL, feedback, seminar evaluations) | tbd; possibly following the methodology and methods of the BSL (evaluation of project) | |
| Aim of procedure | The BSL will be used for before-after comparison in M&E; results of the BSL can possibly be used to adapt the necessary inputs | Documenting the actual quantity and quality of inputs to follow up “offshoots” of inputs | Documenting and reviewing the implementation of inputs | Efficiency of project intervention (ref. to evaluation) | Effectiveness of project interventions (ref. to evaluation) | Assessing efficiency, effectiveness and sustainability | Establishing links to MDG, AP2015, AGENDA21 |
| | → We know where we start from | → We know what has been delivered and whether quantity and quality were adequate | → We know which activities have been implemented with the inputs and whether improvements are necessary | → We know what has happened and why (and why other, possibly expected, outputs did not happen) | → We know how outputs are utilized: in which manner, whether services are accepted and why (and why some are not utilized) | → We know whether objectives have been achieved (what), why they have been achieved (lessons learned) and how | |
| Possible information needs | Programme steering; planning/review of resources; review of relevant impact areas and hypotheses / for donors: later verification of effectiveness of project intervention; accountability to clients, public | Proof of inputs given (evaluating inputs and costs: budget); verification; monitoring to follow up the results chain / accountability to clients, public (costs, quantity and quality) | Review and control of input-activity / accountability to clients, public; statements for programme steering (improvements necessary?) | Cost-benefit analysis; best-practice examples; accountability to clients, public; statements for programme steering; knowledge management | Adequacy of project intervention (legitimacy; monitoring); results-based review of effects / accountability to clients, public; statements for programme steering (adaptation necessary?); knowledge management | Accountability to clients, public; statements for programme steering; knowledge management (providing lessons learned for following activities and projects) | Verification of contribution to MDG, development frameworks, best practices |
| Timeframe | Usually before the intervention or at the beginning of the project | Depending on the timeframe developed by the project; possibly alongside input delivery; input M&E as a continuous task | Following input monitoring and evaluation (not clearly separable); taking into account available resources for M&E | According to available resources (time, finances, staff) and the timeframe addressed by the project (possibly differentiating between short-, medium- and long-term indicators for outputs); continuous task referring to milestones | According to available resources (time, finances, staff) and the timeframe addressed by the project (possibly differentiating between short-, medium- and long-term indicators for use of outputs); takes into account the timeframe of indicators and refers to milestones | End of project (evaluation), but already included in the monitoring (“impacts need preparation”): addressed continuously while following up milestones; included in baseline and mid-term review | End of project and some years after (ex-post evaluation, but an indication of contribution is possibly available earlier) |
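The planned-versus-actual comparison along the results chain described in the matrix could be kept in a very small data structure. The following is only a sketch: the class `Indicator`, the function `off_track`, the stage names and the 80 % threshold are assumptions made for illustration and do not come from the GTZ guidelines, and the example values are invented rather than taken from any project.

```python
from dataclasses import dataclass

# Stages of the results chain, in the order used by the monitoring matrix.
STAGES = [
    "inputs",
    "activities",
    "outputs",
    "use_of_outputs",
    "direct_results",
    "indirect_results",
]


@dataclass
class Indicator:
    stage: str      # one of STAGES
    name: str       # hypothetical indicator label
    planned: float  # target value, e.g. from the plan of operation
    actual: float   # value observed during monitoring

    def achievement(self) -> float:
        """Share of the planned value actually reached (1.0 = fully met)."""
        if self.planned == 0:
            raise ValueError("planned value must be non-zero")
        return self.actual / self.planned


def off_track(indicators, threshold=0.8):
    """Return indicators below the (assumed) threshold, ordered along the results chain."""
    flagged = [i for i in indicators if i.achievement() < threshold]
    return sorted(flagged, key=lambda i: STAGES.index(i.stage))


# Illustrative values only, not taken from any real project.
example = [
    Indicator("outputs", "studies published", planned=4, actual=2),
    Indicator("inputs", "advisor days delivered", planned=100, actual=90),
    Indicator("activities", "workshops held", planned=10, actual=5),
]

for ind in off_track(example):
    print(f"{ind.stage}: {ind.name} at {ind.achievement():.0%} of plan")
```

Ordering the flagged indicators by stage mirrors the matrix: a shortfall at an early stage (inputs, activities) is listed before the later stages that depend on it, which supports the "why did something not happen?" question used for programme steering.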

Exemplary Results from TERNA

Outputs - Country Studies

5 editions since 1999, 37 countries

- 2007 edition: 5,000 downloads

- Provision of information for investors

- Help in identifying target markets

- Contribution to investment decisions

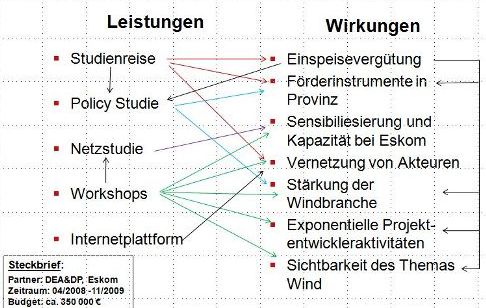

Outputs - Policy Advisory Services

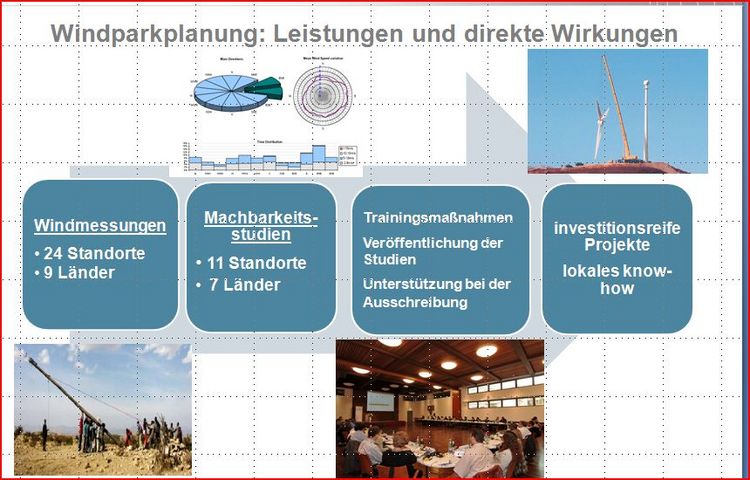

Outputs - Wind Park Planning

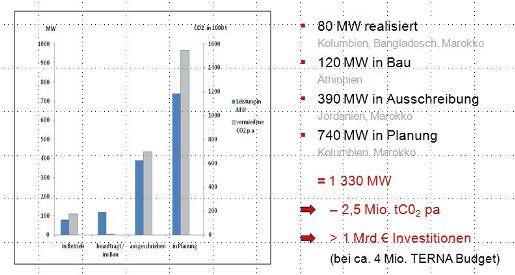

Indirect Results - Wind Park Planning

Higher aggregated development results in the energy sector

Poverty reduction and development

- Improvement of living conditions

- Income generation

- Increased security of supply

- Reduced dependence on oil

Climate and environmental compatibility

- Increased efficiency

- Dissemination of renewable energies

- [1] BMZ/GTZ 2003: Handreichung zur Bearbeitung von AURA-Angeboten. BMZ-Referate 220 und 222, GTZ-Stabstelle 04 Unternehmensentwicklung, OE 042 Interne Evaluierung

- [2] OECD 2002: Glossary of Key Terms in Evaluation and Results Based Management, p. 27

- [3] Ibid., p. 21

- [4] GTZ 2008: Results-based Monitoring. Guidelines for Technical Cooperation. Eschborn

- [5] Cartoon: GTZ 1996: PIM - Booklet 4: The concept of participatory impact monitoring by Eberhard Gohl / Dorsi German. Eschborn, p. 10